Apple today unveiled new Apple Intelligence features that will elevate the user experience across iPhone, iPad, Mac, Apple Watch, and Apple Vision Pro.

Apple Intelligence unlocks new ways for users to communicate with features like Live Translation; do more with what’s on their screen with updates to visual intelligence; and express themselves with enhancements to Image Playground and Genmoji.

Additionally, Shortcuts can now tap into Apple Intelligence directly, and developers will be able to access the on-device large language model at the core of Apple Intelligence, giving them direct access to intelligence that is powerful, fast, built with privacy, and available even when users are offline.

“Last year, we took the first steps on a journey to bring users intelligence that’s helpful, relevant, easy to use, and right where users need it, all while protecting their privacy. Now, the models that power Apple Intelligence are becoming more capable and efficient, and we’re integrating features in even more places across each of our operating systems,” said Craig Federighi, Apple’s senior vice president of Software Engineering.

“We’re also taking the huge step of giving developers direct access to the on-device foundation model powering Apple Intelligence, allowing them to tap into intelligence that is powerful, fast, built with privacy, and available even when users are offline. We think this will ignite a whole new wave of intelligent experiences in the apps users rely on every day. We can’t wait to see what developers create.”

Apple Intelligence features will be coming to eight more languages by the end of the year: Danish, Dutch, Norwegian, Portuguese (Portugal), Swedish, Turkish, Chinese (traditional), and Vietnamese.

Live Translation Breaks Down Language Barriers

For those moments when a language barrier gets in the way, Live Translation can help users communicate across languages when messaging or speaking.

The experience is integrated into Messages, FaceTime, and Phone, and enabled by Apple-built models that run entirely on device, so users’ personal conversations stay personal.

In Messages, Live Translation can automatically translate messages. If a user is making plans with new friends while traveling abroad, their message can be translated as they type and delivered in the recipient’s preferred language; when a response arrives, it can be translated instantly.

On FaceTime calls, a user can follow along with translated live captions while still hearing the speaker’s voice. And when on a phone call, the translation is spoken aloud throughout the conversation.

Updates to Genmoji and Image Playground

Genmoji and Image Playground provide users with even more ways to express themselves. In addition to turning a text description into a Genmoji, users can now mix together emoji and combine them with descriptions to create something new.

When users make images inspired by family and friends using Genmoji and Image Playground, they have the ability to change expressions or adjust personal attributes, like hairstyle, to match their friend’s latest look.

In Image Playground, users can tap into brand-new styles with ChatGPT, like an oil painting style or vector art. For moments when users have a specific idea in mind, they can tap Any Style and describe what they want.

Image Playground sends a user’s description or photo to ChatGPT and creates a unique image. Users are always in control, and nothing is shared with ChatGPT without their permission.

Visual Intelligence Helps Users Search and Take Action

Building on Apple Intelligence, visual intelligence extends to a user’s iPhone screen so they can search and take action on anything they’re viewing across their apps.

Visual intelligence already helps users learn about objects and places around them using their iPhone camera, and it now enables users to do more, faster, with the content on their iPhone screen.

Users can ask ChatGPT questions about what they’re looking at on their screen to learn more, as well as search Google, Etsy, or other supported apps to find similar images and products.

If there’s an object a user is especially interested in, like a lamp, they can highlight it to search for that specific item or similar objects online.

Visual intelligence also recognizes when a user is looking at an event and suggests adding it to their calendar. Apple Intelligence then extracts the date, time, and location to prepopulate these key details into an event.

Users can access visual intelligence for what’s on their screen by simply pressing the same buttons used to take a screenshot. Users will have the choice to save or share their screenshot, or explore more with visual intelligence.

Apple Intelligence Expands to Fitness on Apple Watch

Workout Buddy is a first-of-its-kind workout experience on Apple Watch with Apple Intelligence that incorporates a user’s workout data and fitness history to generate personalized, motivational insights during their session.

To offer meaningful inspiration in real time, Workout Buddy analyzes data from a user’s current workout along with their fitness history, based on data like heart rate, pace, distance, Activity rings, personal fitness milestones, and more.

A new text-to-speech model then translates insights into a dynamic generative voice built using voice data from Fitness+ trainers, so it has the right energy, style, and tone for a workout. Workout Buddy processes this data privately and securely with Apple Intelligence.

Workout Buddy will be available on Apple Watch with Bluetooth headphones, and requires an Apple Intelligence-supported iPhone nearby. It will be available starting in English, across some of the most popular workout types: Outdoor and Indoor Run, Outdoor and Indoor Walk, Outdoor Cycle, HIIT, and Functional and Traditional Strength Training.

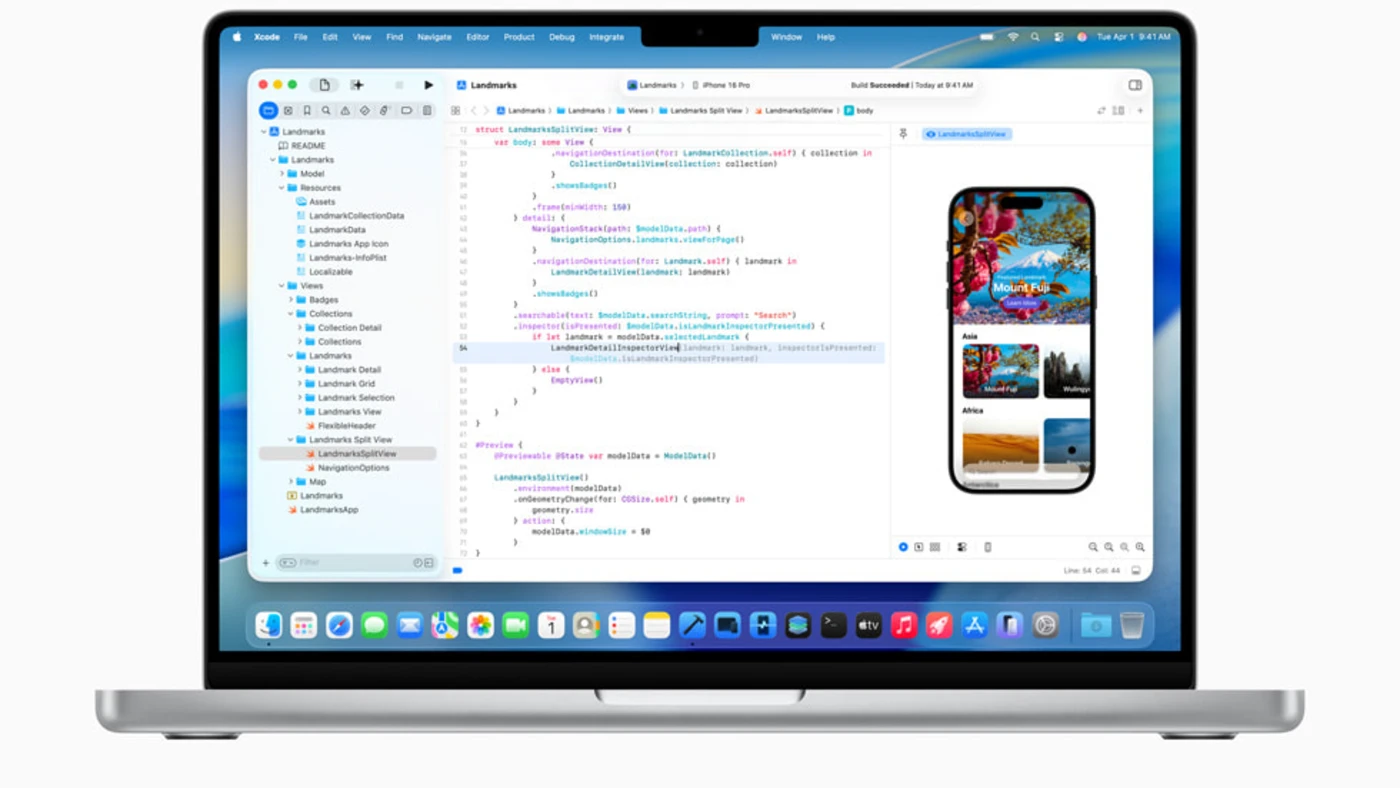

Apple Intelligence On-Device Model Now Available to Developers

Apple is opening up access for any app to tap directly into the on-device foundation model at the core of Apple Intelligence.

With the Foundation Models framework, app developers will be able to build on Apple Intelligence to bring users new experiences that are intelligent, available when they’re offline, and that protect their privacy, using AI inference that is free of cost.

For example, an education app can use the on-device model to generate a personalized quiz from a user’s notes, without any cloud API costs, or an outdoors app can add natural language search capabilities that work even when the user is offline.

The framework has native support for Swift, so app developers can easily access the Apple Intelligence model with as few as three lines of code. Guided generation, tool calling, and more are all built into the framework, making it easier than ever to build generative capabilities right into a developer’s existing app.
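As a rough illustration of the quiz example above, the following Swift sketch sends a prompt to the on-device model and returns the text response. It is not taken from Apple's announcement. The helper names `quizPrompt(from:)` and `generateQuiz(from:)` are hypothetical, the OS versions in the `@available` line are an assumption, and the framework calls (`LanguageModelSession`, `respond(to:)`, `SystemLanguageModel.default.availability`) should be checked against the current developer documentation before use.

```swift
#if canImport(FoundationModels)
import FoundationModels
#endif

/// Hypothetical helper (not part of the framework): builds the prompt text
/// for the "quiz from a user's notes" example.
func quizPrompt(from notes: String) -> String {
    "Write three short quiz questions based on these notes:\n" + notes
}

// The guard keeps this file compiling on toolchains that do not ship the framework.
#if canImport(FoundationModels)
/// Hypothetical wrapper around the on-device model.
/// Returns nil when the model is not available on this device.
/// The OS versions below are an assumption, not stated in the announcement.
@available(iOS 26.0, macOS 26.0, *)
func generateQuiz(from notes: String) async throws -> String? {
    // The model can be unavailable, for example on unsupported hardware
    // or when Apple Intelligence is turned off.
    guard case .available = SystemLanguageModel.default.availability else {
        return nil
    }

    // The core of the request: create a session, send a prompt, read the text.
    let session = LanguageModelSession()
    let response = try await session.respond(to: quizPrompt(from: notes))
    return response.content
}
#endif
```

From an async context, a call would look like `let quiz = try await generateQuiz(from: notes)`. Guided generation and tool calling, both mentioned above, are not shown in this sketch.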

Shortcuts Get More Intelligent

Shortcuts are now more powerful and intelligent than ever. Users can tap into intelligent actions, a whole new set of shortcuts enabled by Apple Intelligence. Users will see dedicated actions for features like summarizing text with Writing Tools or creating images with Image Playground.

Now users will be able to tap directly into Apple Intelligence models, either on-device or with Private Cloud Compute, to generate responses that feed into the rest of their shortcut, maintaining the privacy of information used in the shortcut.

For example, a student can build a shortcut that uses the Apple Intelligence model to compare an audio transcription of a class lecture to the notes they took, and add any key points they may have missed.

Users can also choose to tap into ChatGPT to provide responses that feed into their shortcut.

Apple Intelligence is even more deeply integrated into the apps and experiences that users rely on every day, including Reminders, Apple Wallet, and Messages.

Reminders

The most relevant actions in an email, website, note, or other content can now be identified and automatically categorized in Reminders.

Apple Wallet

Apple Wallet can now identify and summarize order tracking details from emails sent from merchants or delivery carriers.

This works across all of a user’s orders, giving them the ability to see their full order details, progress notifications, and more, all in one place.

Messages

Users can create a poll for anything in Messages, and with Apple Intelligence, Messages can detect when a poll might come in handy and suggest one.

In addition, Backgrounds in the Messages app lets a user personalize their chats with stunning designs, and they can create unique backgrounds that fit their conversation with Image Playground.

Availability

All of these new features are available for testing starting today through the Apple Developer Program at developer.apple.com, and a public beta will be available through the Apple Beta Software Program next month.