Microsoft has announced a new tool that it claims can detect deepfake manipulation in images and video as it seeks to tackle disinformation online.

Deepfakes, or synthetic media, are photos, videos, or audio files manipulated by artificial intelligence (AI). And they’re becoming increasingly hard to detect.

In malicious hands, deepfake technology can make people appear to say things they didn’t or appear to be in places they weren’t, posing an emerging threat to public figures like politicians, but also to businesses targeted by sophisticated phishing scammers.

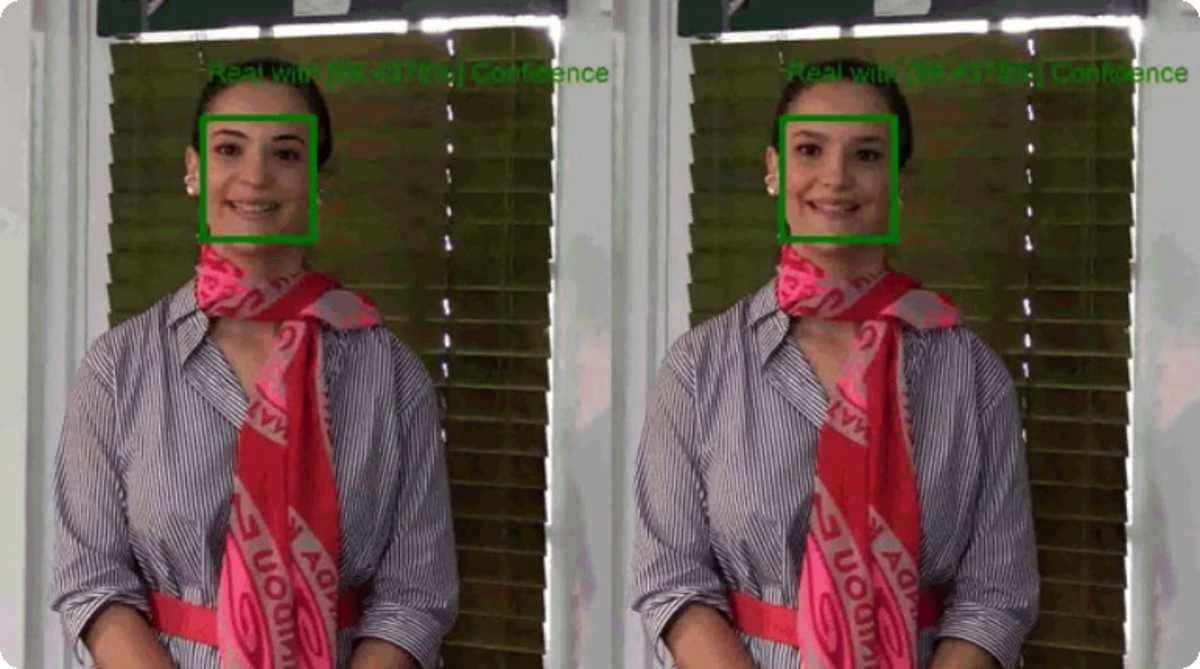

The tech giant’s new software can rank media with a percentage chance or confidence score to give an indication of how likely it is the material has been artificially created. Microsoft hopes the solution can help to combat disinformation on the web “in the short run,” especially with the US election coming up in November.

Developed alongside Microsoft’s responsible AI team and AI ethics advisory board, the tool works by detecting the blending boundary of the deepfake and subtle fading or greyscale elements that might not be detectable by the human eye.

But the firm also notes that because deepfakes are generated by AI that continues to learn, it is “inevitable” that they will begin to beat conventional detection technology. Microsoft stated:

“We expect that methods for generating synthetic media will continue to grow in sophistication. As all AI detection methods have rates of failure, we have to understand and be ready to respond to deepfakes that slip through detection methods.

“Thus, in the longer term, we must seek stronger methods for maintaining and certifying the authenticity of news articles and other media. There are few tools today to help assure readers that the media they’re seeing online came from a trusted source and that it wasn’t altered.”

A panel of experts recently ranked deepfakes as the most dangerous threat posed by AI technology in a report published by University College London (UCL).

As deepfake technology continues to advance, specialists said that fake content would become more difficult to identify and stop, and could assist bad actors in a variety of aims, from discrediting a public figure to extracting funds by impersonating a couple’s son or daughter in a video call.

Such uses could ultimately undermine trust in audio and visual evidence, the authors of the report said, which could cause great societal harm.