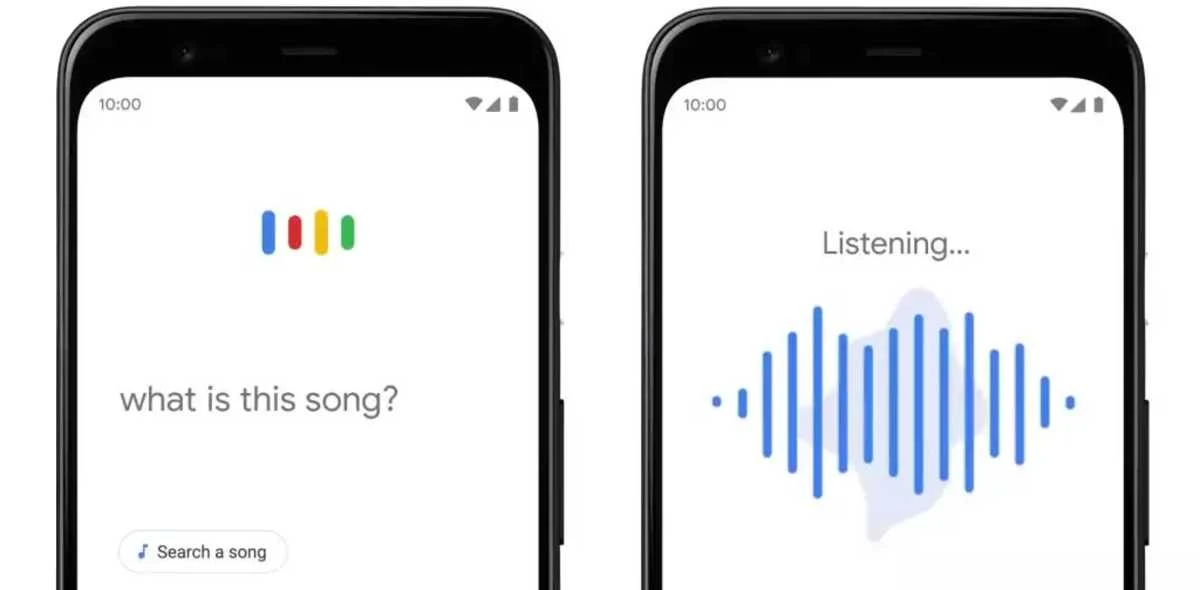

Starting today, you can hum, whistle or sing a melody to Google to search for a song. On your mobile device, open the latest version of the Google app or find your Google Search widget, then tap the mic icon and say “what’s this song?” or tap the “Search a song” button. Then start humming for 10 to 15 seconds.

On Google Assistant, it’s just as simple. Say “Hey Google, what’s this song?” and then hum the tune. This feature is currently available in English on iOS, and in more than 20 languages on Android. Google says it hopes to expand this to more languages in the future.

After you finish humming, Google’s machine learning algorithm helps identify potential song matches. And don’t worry: you don’t need perfect pitch to use this feature. Google will show you the most likely options based on the tune. You can then select the best match to explore information about the song and artist, view any accompanying music videos, listen to the song on your favourite music app, find the lyrics, read analysis and, when available, check out other recordings of the song.

The hum-to-search feature is rolling out starting today in Google Search and on the Google Assistant.

How does it work?

An easy way to explain it is that a song’s melody is like its fingerprint: each one has its own unique identity. We’ve built machine learning models that can match your hum, whistle or singing to the right “fingerprint.”

When you hum a melody into Search, Google’s machine learning models transform the audio into a number-based sequence representing the song’s melody. The models are trained to identify songs based on a variety of sources, including humans singing, whistling or humming, as well as studio recordings.

The algorithms also strip away all the other details, such as accompanying instruments and the voice’s timbre and tone. What’s left is the song’s number-based sequence, or fingerprint.
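To make the idea of a "number-based sequence" concrete, here is a minimal hand-crafted sketch. It is not Google's method, which relies on trained machine learning models. It assumes a separate pitch detector (not shown) has already produced one frequency estimate in Hz per audio frame, with 0 marking frames where no pitch was found. Keeping only the pitch discards instruments and timbre. Converting to semitones and subtracting the median also discards the key, so the same tune hummed higher or lower yields roughly the same sequence. The function names `hz_to_semitones` and `melody_fingerprint` are hypothetical.

```python
import math
from statistics import median


def hz_to_semitones(freq_hz: float, ref_hz: float = 440.0) -> float:
    """Convert a frequency in Hz to a fractional semitone offset from ref_hz (A4)."""
    return 12.0 * math.log2(freq_hz / ref_hz)


def melody_fingerprint(pitch_track_hz: list[float]) -> list[float]:
    """Turn a per-frame pitch track into a key-invariant number sequence.

    Hypothetical illustration: frames with no detected pitch (<= 0 Hz) are
    dropped, the rest are converted to semitones, and the median is subtracted
    so the sequence describes the melody's shape rather than the singer's key.
    """
    semis = [hz_to_semitones(f) for f in pitch_track_hz if f > 0]
    if not semis:
        return []
    center = median(semis)
    return [s - center for s in semis]


# The same three notes sung an octave apart give (nearly) identical sequences.
low = melody_fingerprint([220.0, 0.0, 246.94, 261.63])
high = melody_fingerprint([440.0, 0.0, 493.88, 523.26])
print([round(x, 2) for x in low])
print([round(x, 2) for x in high])
```

Both printed sequences come out at about two semitones below, at, and one semitone above the middle note. That is why a hum in any key can still line up with the original recording.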

Google compares these sequences to thousands of songs from around the world and identifies potential matches in real time. For example, if you listen to Tones and I’s “Dance Monkey,” you’ll recognize the song whether it was sung, whistled, or hummed.
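One way to picture the comparison step is a ranked search over a catalog of melody sequences. The sketch below swaps in dynamic time warping (DTW), a classic query-by-humming technique. It is not what Google has described using. DTW tolerates a hum that is slower, faster or unevenly paced compared with the recording. The catalog titles and numbers are made up for illustration. A real system covering thousands of songs would need an index rather than this brute-force loop.

```python
import math


def dtw_distance(a: list[float], b: list[float]) -> float:
    """Dynamic time warping distance using absolute difference as the local cost.

    Either sequence may be stretched in time, so a rushed or drawn-out hum can
    still align with a melody of a different length.
    """
    n, m = len(a), len(b)
    if n == 0 or m == 0:
        return math.inf
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: repeat a's frame, repeat b's frame, or advance both.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def rank_matches(query: list[float], catalog: dict[str, list[float]], top_k: int = 3) -> list[str]:
    """Return the titles of the top_k catalog melodies closest to the query."""
    scored = sorted((dtw_distance(query, melody), title) for title, melody in catalog.items())
    return [title for _, title in scored[:top_k]]


# Hypothetical catalog of key-normalized melody sequences (in semitones).
catalog = {
    "Song A": [0, 2, 4, 5, 4, 2, 0],
    "Song B": [0, -3, -5, -3, 0, 3, 5],
}
# A hum of "Song A" that lingers on some notes.
hum = [0, 0, 2, 2, 4, 4, 5, 4, 2, 0]
print(rank_matches(hum, catalog))
```

Returning a short ranked list rather than a single answer mirrors how the feature shows you several likely options to choose from.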

Similarly, Google’s machine learning models recognize the melody of the studio-recorded version of the song, which can be used to match it with a person’s hummed audio.